Benchmarking Tools Overview

Difficulty: Beginner

Building on our qualitative benchmarking framework, this tutorial presents a methodology and toolset for quantitatively evaluating GenAI tools on additive manufacturing (AM) tasks.

Key Concepts:

- Quantitative Evaluation: Measurable metrics for objective assessment of GenAI model performance

- Comprehensive Framework: Tools that evaluate across agnostic, domain-specific, and problem-specific dimensions

- Comparative Analysis: Methods to systematically benchmark leading GenAI models, including GPT-4o, GPT-4 Turbo, o1, o3-mini, Gemini, and Llama 3

- Practical Tools: Software implementations to streamline the evaluation process

This tutorial will guide you through the process of setting up and using our benchmarking tools to evaluate different GenAI models for your specific AM applications, helping you make informed decisions about which models best suit your needs.

Quantitative Metrics for Evaluation

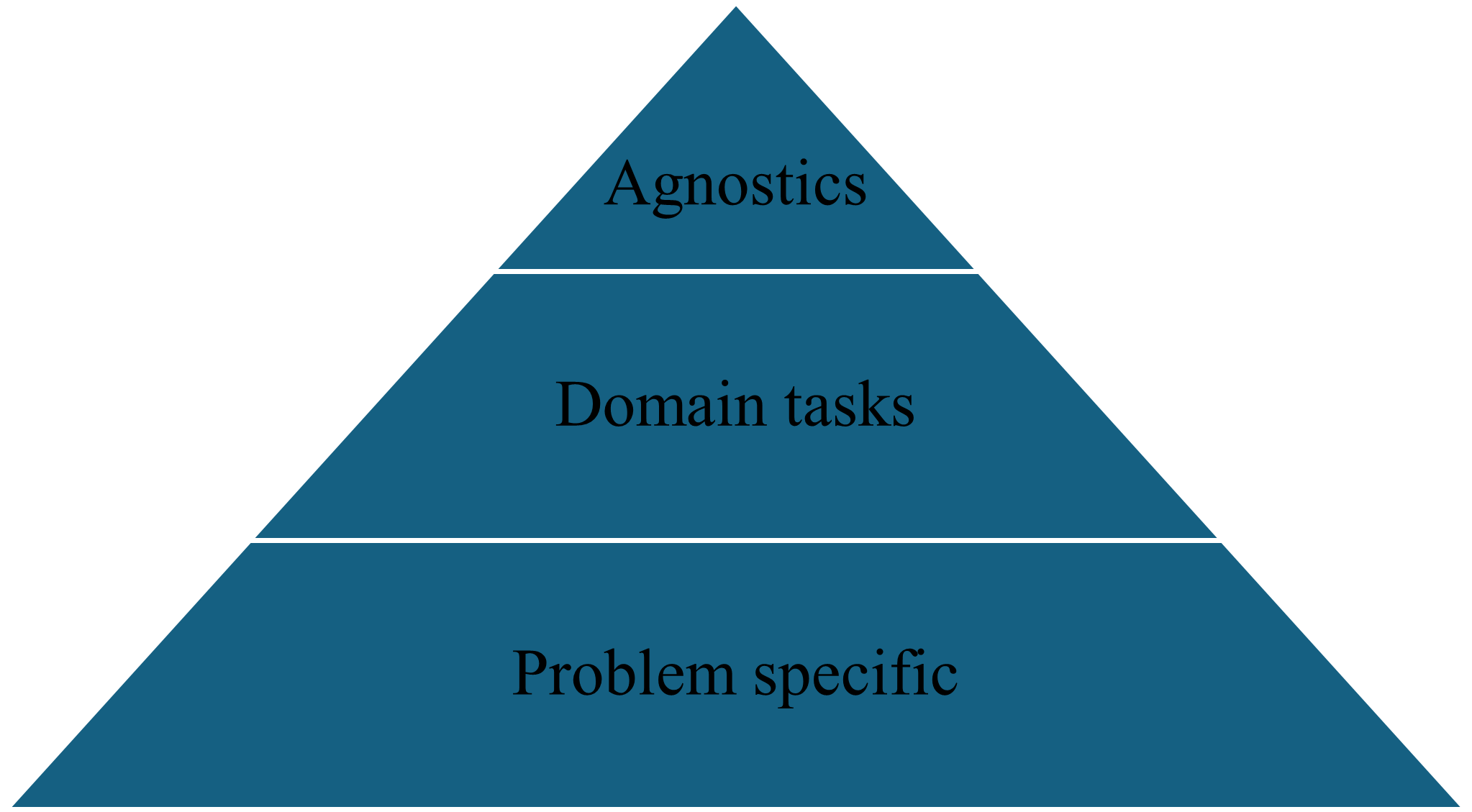

BeginnerOur benchmarking approach uses quantitative metrics across three categories to provide objective measurements of GenAI performance in AM contexts. These metrics are designed to be reproducible and comparable across different evaluations.

The three categories of quantitative metrics used in our benchmarking framework

Metric Categories

Agnostic Metrics

Model-independent metrics like response time, token efficiency, and consistency across multiple queries.

- Response Time (ms)

- Token Efficiency Ratio

- Consistency Score (0-1)

- Hallucination Rate (%)
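The exact formulas behind these metrics are not spelled out here, so the following is only a minimal sketch of how two of them could be computed. It assumes that Response Time is wall-clock latency around a single model call and that Consistency Score is the mean pairwise text similarity of repeated responses to the same prompt. The helper names `timed_call` and `consistency_score` are hypothetical and not part of the benchmarking tools.

```python
import time
from difflib import SequenceMatcher
from itertools import combinations
from typing import Callable, List, Tuple


def timed_call(model_fn: Callable[[str], str], prompt: str) -> Tuple[str, float]:
    """Return the model's response and the elapsed wall-clock time in ms."""
    start = time.perf_counter()
    response = model_fn(prompt)
    elapsed_ms = (time.perf_counter() - start) * 1000.0
    return response, elapsed_ms


def consistency_score(responses: List[str]) -> float:
    """Mean pairwise similarity (0-1) of responses to the same prompt.

    Assumption: similarity is difflib's character-level ratio. A semantic
    measure (e.g. embedding similarity) may suit long answers better.
    """
    if len(responses) < 2:
        return 1.0  # a single response is trivially consistent with itself
    ratios = [
        SequenceMatcher(None, a, b).ratio()
        for a, b in combinations(responses, 2)
    ]
    return sum(ratios) / len(ratios)
```

Character-level similarity is cheap and deterministic, but it penalizes rephrasings that mean the same thing, so treat the resulting score as a lower bound on semantic consistency.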

Domain Metrics

AM-specific metrics measuring knowledge accuracy, terminology precision, and domain understanding.

- Domain Knowledge Score (0-100)

- Terminology Precision (%)

- Technical Accuracy Rate (%)

- Reference Accuracy Score

Problem Metrics

Task-specific metrics evaluating performance on particular AM challenges like design optimization or defect detection.

- Solution Quality Score (0-100)

- Design Feasibility Rating

- Parameter Optimization Score

- Defect Recognition Accuracy (%)

Each metric is defined with specific calculation methods and normalization procedures to ensure consistency across evaluations. The metrics can be weighted according to your specific priorities when evaluating models for particular applications.
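As an illustration of normalization and weighting, here is a minimal sketch that min-max scales each metric to 0-1, inverts metrics where lower is better, and combines them with user-chosen weights. The metric ranges, the weights, and the names `METRIC_SPECS`, `normalize`, and `weighted_score` are assumptions made for this example, not the framework's actual definitions.

```python
from typing import Dict, Tuple

# (min, max, higher_is_better) per metric. These ranges are illustrative
# assumptions; choose bounds that match your own measurements.
METRIC_SPECS: Dict[str, Tuple[float, float, bool]] = {
    "response_time_ms": (0.0, 10_000.0, False),
    "consistency": (0.0, 1.0, True),
    "domain_knowledge": (0.0, 100.0, True),
    "solution_quality": (0.0, 100.0, True),
}


def normalize(name: str, value: float) -> float:
    """Min-max scale a raw metric to 0-1, where 1 is always 'better'."""
    lo, hi, higher_is_better = METRIC_SPECS[name]
    clipped = min(max(value, lo), hi)  # clamp out-of-range values
    scaled = (clipped - lo) / (hi - lo)
    return scaled if higher_is_better else 1.0 - scaled


def weighted_score(raw: Dict[str, float], weights: Dict[str, float]) -> float:
    """Weighted average of the normalized metrics named in `weights`."""
    total_weight = sum(weights.values())
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive value")
    weighted = sum(w * normalize(m, raw[m]) for m, w in weights.items())
    return weighted / total_weight


raw = {"response_time_ms": 2500.0, "consistency": 0.8,
       "domain_knowledge": 90.0, "solution_quality": 70.0}
weights = {"response_time_ms": 1.0, "consistency": 1.0,
           "domain_knowledge": 2.0, "solution_quality": 2.0}
score = weighted_score(raw, weights)  # (0.75 + 0.8 + 1.8 + 1.4) / 6
```

Dividing by the total weight keeps the composite score in the 0-1 range regardless of how the weights are scaled.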

Evaluation Framework Architecture

Difficulty: Intermediate

Our benchmarking framework provides a structured approach to model evaluation that can be consistently applied across different GenAI models and AM applications.

Framework Components

- Test Suite Generator: Creates standardized test cases for AM tasks

- Model Interface Layer: Provides consistent interaction with different GenAI models

- Response Analyzer: Extracts and processes model outputs

- Metrics Calculator: Computes quantitative metrics from responses

- Visualization Engine: Generates comparative visualizations of results

- Reporting Module: Produces detailed evaluation reports
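To make the role of the Model Interface Layer concrete, the sketch below shows one way to give different models a single calling convention, so that the rest of the pipeline never depends on a vendor-specific client. `ModelClient`, `EchoModel`, and `run_suite` are hypothetical names invented for this illustration; they are not the actual classes in the benchmarking tools.

```python
from dataclasses import dataclass
from typing import Dict, List, Protocol


class ModelClient(Protocol):
    """Anything with a generate(prompt) -> str method can be benchmarked."""

    def generate(self, prompt: str) -> str:
        ...


@dataclass
class EchoModel:
    """Offline stand-in for a real model, handy for testing the pipeline."""

    prefix: str

    def generate(self, prompt: str) -> str:
        return f"{self.prefix}: {prompt}"


def run_suite(models: Dict[str, ModelClient],
              prompts: List[str]) -> Dict[str, List[str]]:
    """Send every prompt to every model and collect responses per model."""
    return {
        name: [client.generate(prompt) for prompt in prompts]
        for name, client in models.items()
    }
```

A real adapter would wrap a vendor SDK call inside `generate`. Keeping a stub like `EchoModel` around lets you test the analyzer and metrics stages without spending API credits.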

Evaluation Process

- Definition Phase: Set evaluation objectives and select relevant metrics

- Test Generation: Create standardized test cases for AM tasks

- Model Testing: Run tests across all models in a consistent environment

- Data Collection: Gather responses and performance measurements

- Analysis: Calculate metrics and comparative statistics

- Visualization: Generate charts and tables for analysis

- Reporting: Create comprehensive evaluation reports

Evaluation Dimensions

Our framework evaluates models along multiple dimensions to provide a comprehensive assessment:

- Performance: How well the model handles different AM tasks

- Efficiency: Computational resources required and response times

- Knowledge: Depth and accuracy of AM-specific information

- Adaptability: Ability to handle variations in queries and problems

- Usability: Ease of integration and practical application

Benchmarking Tools Implementation

Difficulty: Advanced

We've developed a suite of tools to implement the benchmarking framework and streamline the evaluation process. These tools automate many aspects of the evaluation, from test case generation to results analysis.

Tool Components

GenAI-AM-Bench Suite

Core benchmarking framework and coordination tools.

Test Case Generator

Creates standardized AM test cases with expected responses.

Model API Wrapper

Provides uniform interface to different GenAI models.

Metrics Analyzer

Calculates and aggregates quantitative metrics from model responses.

Visualization Dashboard

Interactive visualization of benchmarking results.

    import config  # local config.py holding API keys and model paths (see Step 1)

    from genai_am_bench import BenchmarkRunner, ModelRegistry, TestSuite
    from genai_am_bench.metrics import MetricsCalculator
    from genai_am_bench.visualization import ResultsVisualizer

    # Initialize the benchmark runner
    runner = BenchmarkRunner()

    # Register models to evaluate
    runner.register_model("gpt-4o", ModelRegistry.GPT4O, api_key=config.OPENAI_API_KEY)
    runner.register_model("gemini", ModelRegistry.GEMINI, api_key=config.GEMINI_API_KEY)
    runner.register_model("llama3", ModelRegistry.LLAMA3, model_path=config.LLAMA3_MODEL_PATH)

    # Load or generate test suite
    test_suite = TestSuite.from_json("am_test_cases.json")
    # Or generate programmatically:
    # test_suite = TestSuite.generate(tasks=["design_optimization", "parameter_selection", "defect_detection"])

    # Run the benchmark
    results = runner.run_benchmark(
        test_suite=test_suite,
        metrics=["response_time", "token_efficiency", "domain_knowledge", "solution_quality"],
        trials=5,       # Run each test multiple times for statistical significance
        parallel=True,  # Run tests in parallel for efficiency
        timeout=60,     # Maximum time (seconds) to wait for model response
    )

    # Calculate aggregate metrics
    metrics_calc = MetricsCalculator()
    aggregated_results = metrics_calc.calculate_aggregates(results)

    # Generate visualizations
    visualizer = ResultsVisualizer()
    visualizer.generate_comparison_chart(aggregated_results, "Model Comparison by Metric")
    visualizer.generate_radar_chart(aggregated_results, "Model Capability Profile")

    # Export results
    results.to_csv("benchmark_results.csv")
    visualizer.save_charts("benchmark_visuals.html")

Implementation Guide

Difficulty: Intermediate

Follow these steps to implement the benchmarking tools and evaluate GenAI models for your AM applications:

Step 1: Set Up Environment

- Clone the repository:

      git clone https://github.com/nowrin0102/GenAI-Metrics-for-Additive-Manufacturing

- Install dependencies:

      pip install -r requirements.txt

- Configure API keys for models in config.py

- Install any model-specific dependencies (e.g., Llama requires specific packages)
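The layout of config.py is not shown in this tutorial, so the following is only a sketch of one possible version. It assumes the settings are read from environment variables, which keeps keys out of version control. The three setting names match the attributes used in the benchmark example (`config.OPENAI_API_KEY`, `config.GEMINI_API_KEY`, `config.LLAMA3_MODEL_PATH`); the environment variable names and the helpers `load_settings` and `missing_settings` are assumptions.

```python
# config.py (hypothetical layout): read secrets from the environment
import os
from typing import Dict, List, Mapping

SETTING_NAMES = ("OPENAI_API_KEY", "GEMINI_API_KEY", "LLAMA3_MODEL_PATH")


def load_settings(environ: Mapping[str, str] = os.environ) -> Dict[str, str]:
    """Collect the expected settings, using '' for anything that is unset."""
    return {name: environ.get(name, "") for name in SETTING_NAMES}


def missing_settings(settings: Mapping[str, str]) -> List[str]:
    """Names of settings that are empty, so setup errors surface early."""
    return [name for name, value in settings.items() if not value]


_settings = load_settings()
OPENAI_API_KEY = _settings["OPENAI_API_KEY"]
GEMINI_API_KEY = _settings["GEMINI_API_KEY"]
LLAMA3_MODEL_PATH = _settings["LLAMA3_MODEL_PATH"]
```

Calling `missing_settings(load_settings())` before a long run gives a quick check that every model you plan to register has its credentials or model path in place.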

Step 2: Define Evaluation Objectives

- Identify specific AM tasks you want to evaluate (e.g., design optimization, process parameter selection)

- Select relevant metrics based on your priorities

- Determine evaluation scale (how many test cases, repetitions, etc.)

- Define acceptable performance thresholds

Step 3: Prepare Test Cases

- Use provided test case templates or create your own

- Ensure consistent format across all test cases

- Include a mix of difficulty levels

- Provide reference solutions or evaluation criteria
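To illustrate what a consistent test case format can look like, here is a sketch with a small validator. The field names (`id`, `task`, `difficulty`, `prompt`, `reference`) and the difficulty labels are hypothetical; the schema actually expected by am_test_cases.json is defined by the provided templates, so treat this only as an example of the checks worth running before a benchmark.

```python
import json
from typing import Any, Dict, List

# Hypothetical schema: adjust to match the provided test case templates.
REQUIRED_FIELDS = ("id", "task", "difficulty", "prompt", "reference")
DIFFICULTY_LEVELS = ("easy", "medium", "hard")


def validate_test_cases(cases: List[Dict[str, Any]]) -> List[str]:
    """Return human-readable problems; an empty list means all cases pass."""
    problems: List[str] = []
    seen_ids = set()
    for index, case in enumerate(cases):
        missing = [field for field in REQUIRED_FIELDS if field not in case]
        if missing:
            problems.append(f"case {index}: missing fields {missing}")
            continue
        if case["id"] in seen_ids:
            problems.append(f"case {index}: duplicate id {case['id']!r}")
        seen_ids.add(case["id"])
        if case["difficulty"] not in DIFFICULTY_LEVELS:
            problems.append(
                f"case {index}: unknown difficulty {case['difficulty']!r}"
            )
    return problems


EXAMPLE_JSON = """
[
  {"id": "design-001", "task": "design_optimization", "difficulty": "medium",
   "prompt": "Suggest ways to reduce support material for an overhanging bracket.",
   "reference": "Reorient the part and replace sharp overhangs with chamfers."}
]
"""

example_cases = json.loads(EXAMPLE_JSON)
problems = validate_test_cases(example_cases)  # empty for the example above
```

Validating before the run is cheaper than discovering a malformed case after hundreds of API calls have already been made.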

Step 4: Run Benchmarks

- Configure the benchmark runner with your models and settings

- Execute the benchmark suite

- Monitor for any API rate limiting or errors

- Store raw results for detailed analysis

Step 5: Analyze & Visualize Results

- Calculate aggregate metrics using the provided tools

- Generate comparative visualizations

- Identify strengths and weaknesses of each model

- Consider statistical significance of differences
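One way to check whether a difference between two models is more than noise is a paired permutation (sign-flip) test on per-test-case scores. The sketch below is an illustrative technique chosen for this example, not necessarily the method used by the provided tools, and `paired_permutation_p_value` is a hypothetical helper. It enumerates all sign assignments exactly, so it is only practical for roughly 20 test cases or fewer; larger suites would need random sampling of sign assignments instead.

```python
from itertools import product
from typing import Sequence


def paired_permutation_p_value(scores_a: Sequence[float],
                               scores_b: Sequence[float]) -> float:
    """Two-sided exact sign-flip test on paired score differences.

    Under the null hypothesis that both models perform equally, each
    per-case difference is equally likely to be positive or negative.
    """
    if len(scores_a) != len(scores_b) or len(scores_a) == 0:
        raise ValueError("need two non-empty score lists of equal length")
    diffs = [a - b for a, b in zip(scores_a, scores_b)]
    observed = abs(sum(diffs))
    extreme = 0
    total = 0
    # Enumerate all 2**n sign assignments (exponential in n).
    for signs in product((1, -1), repeat=len(diffs)):
        total += 1
        flipped = abs(sum(s * d for s, d in zip(signs, diffs)))
        if flipped >= observed - 1e-12:  # tolerance for float rounding
            extreme += 1
    return extreme / total
```

For example, if model A beats model B by exactly one point on each of four test cases, only 2 of the 16 sign assignments are as extreme as the observed result, giving p = 0.125. With so few cases, even a perfectly consistent advantage is not significant at the usual 0.05 level.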

Common Implementation Challenges

API Rate Limits

Many GenAI APIs have rate limits that can impact testing at scale.

Solution: Implement rate limiting, batch processing, and exponential backoff in your test runner. The provided tools include these features by default.
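If you write your own test runner or extend the provided one, exponential backoff can be sketched as follows. `RateLimitError` is a placeholder for whatever exception your API client raises when a rate limit is hit, and `call_with_backoff` with its default delays is a hypothetical helper, not the implementation shipped with the tools.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class RateLimitError(Exception):
    """Placeholder for the rate-limit exception raised by your API client."""


def call_with_backoff(fn: Callable[[], T],
                      max_retries: int = 5,
                      base_delay: float = 1.0,
                      max_delay: float = 30.0,
                      sleep: Callable[[float], None] = time.sleep) -> T:
    """Call fn(), retrying on RateLimitError with exponentially growing waits."""
    attempt = 0
    while True:
        try:
            return fn()
        except RateLimitError:
            if attempt >= max_retries:
                raise  # give up and surface the error to the caller
            delay = min(max_delay, base_delay * (2 ** attempt))
            # Up to 10% random jitter so parallel workers do not retry in lockstep
            sleep(delay + random.uniform(0.0, 0.1 * delay))
            attempt += 1
```

With the defaults, the waits are roughly 1, 2, 4, 8, and 16 seconds before the final attempt. The `sleep` parameter exists so the retry logic can be tested without actually waiting.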

Response Variability

GenAI models may produce different responses to the same query.

Solution: Run multiple trials of each test case and use statistical methods to analyze the distribution of results. Our framework includes tools for handling this variability.
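As a simple illustration of analyzing the distribution of results, the sketch below summarizes repeated trial scores for one test case using Python's standard `statistics` module. `summarize_trials` is a hypothetical helper for this example and is separate from the framework's own tooling.

```python
import statistics
from typing import Dict, List


def summarize_trials(scores: List[float]) -> Dict[str, float]:
    """Summarize repeated scores for one model on one test case."""
    if not scores:
        raise ValueError("need at least one score")
    return {
        "n": float(len(scores)),
        "mean": statistics.mean(scores),
        # Sample standard deviation needs at least two observations.
        "stdev": statistics.stdev(scores) if len(scores) > 1 else 0.0,
        "min": min(scores),
        "max": max(scores),
    }


summary = summarize_trials([70.0, 80.0, 90.0])
# summary["mean"] is 80.0 and summary["stdev"] is 10.0
```

Reporting the spread alongside the mean makes it clear when two models' scores overlap too much to be ranked with confidence.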

Benchmarking Case Study

Difficulty: Beginner

To demonstrate the application of our benchmarking tools, we conducted a comprehensive evaluation of six leading GenAI models on AM-specific tasks.

Evaluation Setup

- Models Evaluated: GPT-4o, GPT-4 Turbo, o1, o3-mini, Gemini, Llama 3

- Test Cases: 25 AM-specific scenarios across design, process, and quality domains

- Metrics: Full suite of agnostic, domain, and problem metrics

- Trials: 3 repetitions per test case for statistical robustness
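A quick calculation from the setup above shows how many model calls an evaluation of this size involves, which helps when planning around rate limits and API costs. The variable names are chosen for this example only.

```python
n_models = 6      # models evaluated
n_test_cases = 25  # AM-specific scenarios
n_trials = 3       # repetitions per test case

calls_per_model = n_test_cases * n_trials  # 75 calls to each model
total_calls = n_models * calls_per_model   # 450 calls overall
```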

Key Findings

Response Quality

GPT-4o and o1 consistently produced the highest quality responses for AM-specific tasks, with particular strength in design optimization scenarios.

Domain Knowledge

All models showed reasonable AM knowledge, but GPT-4o demonstrated superior understanding of process-specific details and technical terminology.

Efficiency Tradeoffs

Smaller models like o3-mini offered significantly faster response times with only a moderate reduction in quality on simpler tasks.

Task Specialization

Different models excelled at different tasks: Gemini performed exceptionally well on material selection tasks, while Llama 3 showed strengths in parameter optimization.

Run Your Own Benchmarks

Explore our benchmarking tools and conduct your own evaluations using the GitHub repository and its documentation: https://github.com/nowrin0102/GenAI-Metrics-for-Additive-Manufacturing

Additional Resources

Difficulty: Beginner

To help you implement effective benchmarking for GenAI models in your AM workflows, we've compiled these additional resources:

Documentation and Guides

- Comprehensive API Documentation for all benchmarking tools

- Test Case Creation Guide for developing effective AM-specific test scenarios

- Model Integration Tutorials for connecting to various GenAI APIs

- Results Interpretation Guide for making sense of benchmarking data

Supplementary Tools

- Test Case Generator with templates for common AM tasks

- Results Visualizer for creating advanced custom visualizations

- API Cost Calculator for estimating expenses of large-scale benchmarking

- Benchmark Scheduler for distributed testing across timeframes

Model Evaluation Datasets

Standardized datasets specifically designed for benchmarking GenAI models on AM tasks.

Prompt Engineering Techniques

Learn how different prompting approaches can affect benchmarking results.
